Canada’s AI Policy Tug-of-War

Four Futures for Canada’s AI Trajectory

Canada’s artificial intelligence (AI) landscape is being shaped by three key policies that reflect competing priorities in governance and innovation. Together, they highlight the challenge of balancing rapid technological development with the need for ethical oversight.

The Artificial Intelligence and Data Act (AIDA), introduced in 2022 as part of Bill C-27, was designed to modernize AI governance. It proposed a risk-based regulatory framework, an AI and Data Commissioner to oversee compliance, and criminal provisions to deter malicious or reckless uses of AI. However, when Parliament was prorogued in January 2025, Bill C-27, and AIDA with it, died on the Order Paper. This left Canada without a clear regulatory framework for emerging AI technologies. The gap creates uncertainty for businesses and leaves critical risks, such as algorithmic bias and malicious AI use, unaddressed.

Meanwhile, Canada continues to rely on the Personal Information Protection and Electronic Documents Act (PIPEDA), enacted in 2000, to manage privacy and data governance. PIPEDA provides a foundation for regulating the collection and use of personal data, but it was not designed for AI-specific concerns such as algorithmic transparency, accountability for automated decisions, and fairness. Without AIDA, PIPEDA alone cannot meet the demands of modern AI applications.

To address immediate infrastructure needs, the government has prioritized the Canadian Sovereign AI Compute Strategy, a $2 billion initiative aimed at building high-performance computing capacity for domestic AI research and development. The strategy aims to strengthen Canada’s competitiveness by reducing reliance on foreign cloud providers and giving researchers and businesses, including small and medium-sized enterprises (SMEs), access to advanced computational resources. However, it does not address the legal or ethical challenges left unresolved by AIDA’s delay.

These three policies demonstrate Canada’s competing priorities: fostering innovation through infrastructure investment versus ensuring responsible AI development through governance. How Canada navigates this tension will define its role in the global AI ecosystem.

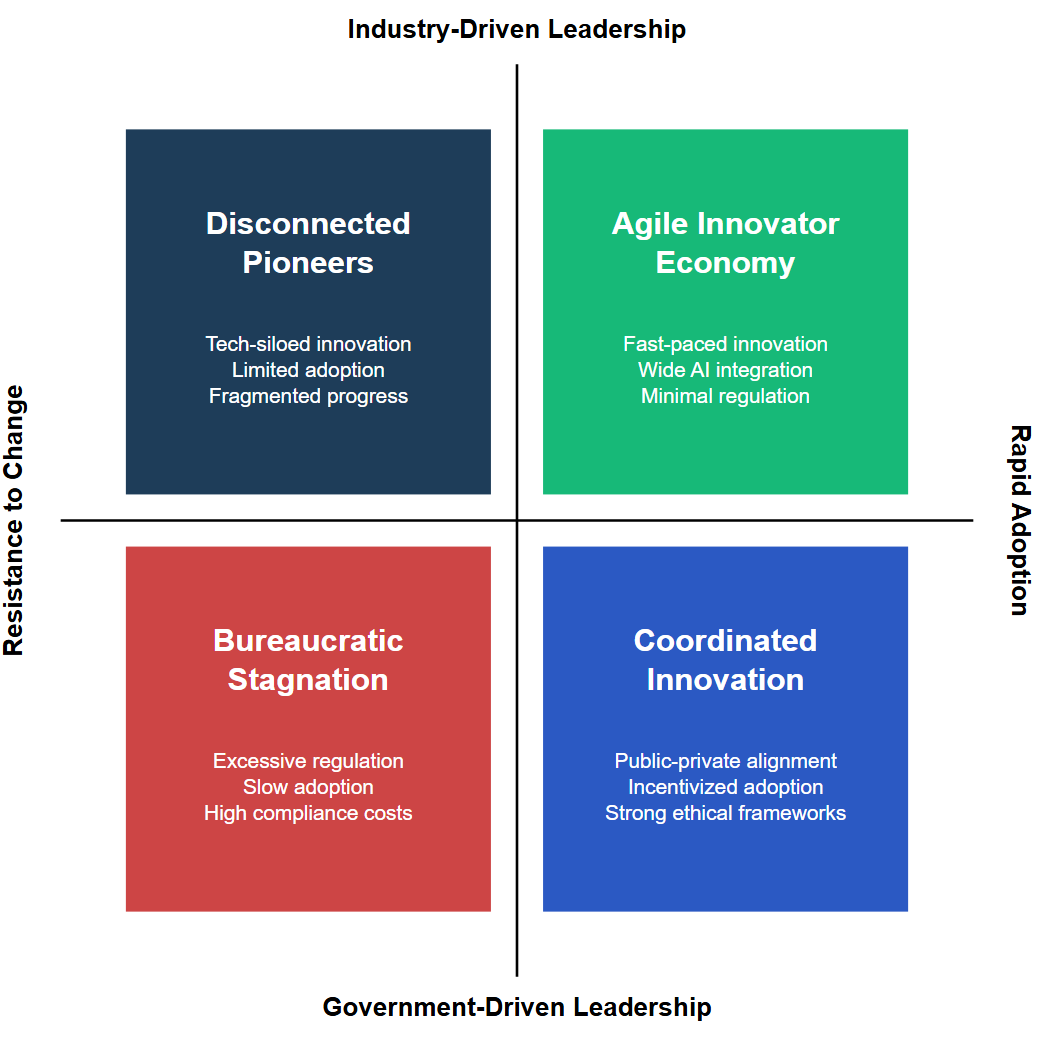

To explore the implications of Canada’s AI policies, let’s consider four potential futures. These scenarios outline the pathways the country may follow, shaped by opportunities, risks, and the interplay of key uncertainties.

A four futures analysis identifies two critical uncertainties and examines their interplay. For Canada’s AI policies, I have chosen these two uncertainties:

Leadership in AI Development: Will AI development be driven primarily by industry, prioritizing agility and market responsiveness, or by government, focusing on coordinated strategies and oversight?

Adoption of AI by Traditional Sectors: Will traditional sectors like healthcare, energy, and agriculture rapidly embrace AI solutions, or will they resist due to costs, infrastructure challenges, or skepticism?

These uncertainties create a matrix with four futures:

Future 1: Agile Innovator Economy (Industry-Driven, Rapid Adoption)

Description

AI development is led by private industry, resulting in fast-paced innovation and agile responses to market demands. Traditional sectors quickly adopt AI solutions due to the efficiency and competitiveness they offer, creating an innovation-driven economy.

Key Features

Industry establishes the direction of AI innovation, focusing on profitability and real-world applications.

AI is widely integrated into traditional sectors like healthcare, manufacturing, and agriculture.

Regulation remains minimal, allowing companies to experiment freely but at the risk of gaps in ethical oversight.

Outcomes

Economic Growth: Rapid expansion of AI industries and adoption across traditional sectors.

Reputation: Seen as a hub for agile and market-driven innovation.

Risks: Ethical lapses and lack of standardized governance could lead to public backlash or long-term instability.

Future 2: Disconnected Pioneers (Industry-Driven, Resistance to Change)

Description

Private industry drives AI innovation, but traditional sectors resist adoption due to cost concerns, infrastructure limitations, or skepticism. AI advancements become siloed in high-tech industries, with limited societal or economic impact.

Key Features

Breakthroughs in AI primarily benefit tech companies, startups, and niche markets.

Traditional sectors lag behind, missing opportunities to improve productivity or services.

Weak alignment between industry innovation and public policy leads to fragmented progress.

Outcomes

Economic Growth: Moderate growth concentrated in specific industries.

Reputation: Viewed as a leader in innovation but disconnected from broader economic needs.

Risks: Widening gaps between tech leaders and traditional sectors exacerbate inequality and economic divides.

Future 3: Coordinated Innovation Ecosystem (Government-Driven, Rapid Adoption)

Description

The government takes a leading role in driving AI development, creating a cohesive strategy that integrates regulatory frameworks and incentives for adoption. Traditional sectors respond positively, integrating AI to enhance productivity and address societal challenges.

Key Features

Government policies ensure alignment between innovation and public interest, emphasizing ethical and sustainable AI.

AI adoption is incentivized in traditional sectors through subsidies, training programs, and public-private partnerships.

Robust governance frameworks minimize risks like bias, misuse, and monopolistic behavior.

Outcomes

Economic Growth: Balanced and inclusive growth across sectors.

Reputation: Canada is recognized as a global leader in coordinated and responsible AI innovation.

Risks Mitigated: Ethical concerns and adoption barriers are addressed effectively.

Future 4: Bureaucratic Stagnation (Government-Driven, Resistance to Change)

Description

The government dominates AI development but struggles to implement effective policies for adoption in traditional sectors. Bureaucracy, inefficiency, and lack of industry collaboration stifle progress, leading to stagnation.

Key Features

Regulatory frameworks are overly restrictive, discouraging industry-led innovation.

Traditional sectors resist AI due to high compliance costs, lack of flexibility, or misaligned incentives.

Government programs fail to address the practical needs of businesses and consumers.

Outcomes

Economic Growth: Minimal growth due to slow adoption and stifled innovation.

Reputation: Perceived as overly cautious and outpaced by global competitors.

Risks: Economic and technological stagnation, reduced competitiveness, and public dissatisfaction.

Canada’s Most Likely AI Future

Based on Canada’s current trajectory, reflected in the Canadian Sovereign AI Compute Strategy and the stalled progress of AIDA, the most likely future is Future 1: Agile Innovator Economy, characterized by industry-driven leadership and rapid adoption of AI. This reflects the government’s prioritization of infrastructure investment over regulatory frameworks, which fosters innovation through minimal oversight and enables traditional sectors to integrate AI solutions quickly. While this approach supports economic growth and competitiveness, it carries risks of ethical lapses and misalignment with global standards.

Indicators Supporting Future 1

Industry-Driven Focus

The Canadian Sovereign AI Compute Strategy prioritizes infrastructure and accessibility, creating immediate opportunities for private-sector innovation.

The government’s apparent shift away from legislation, evident in AIDA’s stalled progress, suggests a reliance on industry self-regulation and voluntary codes of conduct, leaving innovation largely in the hands of private actors.

Rapid Adoption Trends

Traditional sectors like healthcare, agriculture, and energy are increasingly seeking AI-driven efficiencies to remain competitive.

Canadian businesses are motivated to adopt AI solutions to keep pace with global competition, particularly as the government subsidizes infrastructure through the compute strategy.

Minimal Regulation

The absence of a comprehensive regulatory framework, such as the one AIDA proposed, creates an environment where industry can innovate freely, albeit with risks of ethical lapses.

This hands-off approach encourages faster adoption but leaves gaps in oversight and alignment with societal values.

Economic Drivers

Government investments in compute capacity focus on short-term economic gains and innovation, rather than holistic, long-term governance.

Rapid adoption is seen as a way to attract foreign investment and retain talent, key goals of the Sovereign AI Compute Strategy.

Risks and Challenges of Future 1

While this scenario aligns with the current trajectory, it carries risks:

Ethical and Governance Gaps: The lack of strong oversight could lead to bias, misuse, or unethical AI practices, damaging public trust.

Short-Term Thinking: Rapid adoption without sustainability or inclusivity could create long-term economic and social disparities.

Global Misalignment: Canada may fall behind in aligning with international AI standards (e.g., the EU’s AI Act), complicating trade and collaboration.

What Could Shift the Trajectory?

The future might lean toward Future 3: Coordinated Innovation Ecosystem (Government-Driven Leadership, Rapid Adoption) if Canada:

Reintroduces AIDA: Developing a robust AI regulatory framework could balance innovation with ethical oversight.

Strengthens Public-Private Collaboration: Increased alignment between government policies and industry innovation could reduce risks and encourage widespread adoption.

Adopts Global Standards: Synchronizing with international AI governance frameworks could enhance Canada’s reputation and ensure interoperability.

Without significant changes in governance priorities, Future 1: Agile Innovator Economy is the most likely outcome for Canada. While it promises rapid economic growth and innovation, addressing ethical, environmental, and social concerns will be critical to sustain long-term success. If the government takes a more active leadership role, there is potential to transition toward Future 3, which offers a more balanced and sustainable trajectory.