Moving Beyond Experimentation in Autonomous Warfare

Israel’s Strategic Edge

As militaries around the world debate and experiment with the role of AI in future conflicts, Israel is already integrating these technologies into active operations. At the recent Tel Aviv Defense-Tech Summit, Eyal Zamir, Director General of Israel’s Defence Ministry, shared a vision that reflects more than aspiration: it underscores action.

"In a decade, AI-based autonomous systems will lead combat operations across land, sea, and air, reshaping how wars are fought. We must integrate these capabilities now to remain efficient and save lives."

This statement isn’t speculative. It’s grounded in Israel’s current trajectory, where autonomous systems are being deployed and refined in live scenarios. While other nations test concepts in controlled environments, Israel is advancing through direct engagement, gaining insights that others can only hypothesize about.

What Makes This Significant?

Most militaries remain in a phase of experimentation, cautiously exploring the potential of AI and autonomy. Israel’s approach is different: it prioritizes implementation and iteration in real-world conditions. This allows for:

Operational Feedback: Lessons learned in active use feed directly into refining these systems.

Capability Integration: Autonomous technologies aren’t isolated tools; they are being embedded into broader strategies across domains.

Strategic Agility: By acting now, Israel positions itself to shape the standards and expectations of autonomous warfare before they fully materialize.

What’s Next for Autonomous Systems?

Israel’s proactive integration of autonomous systems forces a critical question for other nations:

How does the gap between experimentation and active integration affect global power dynamics?

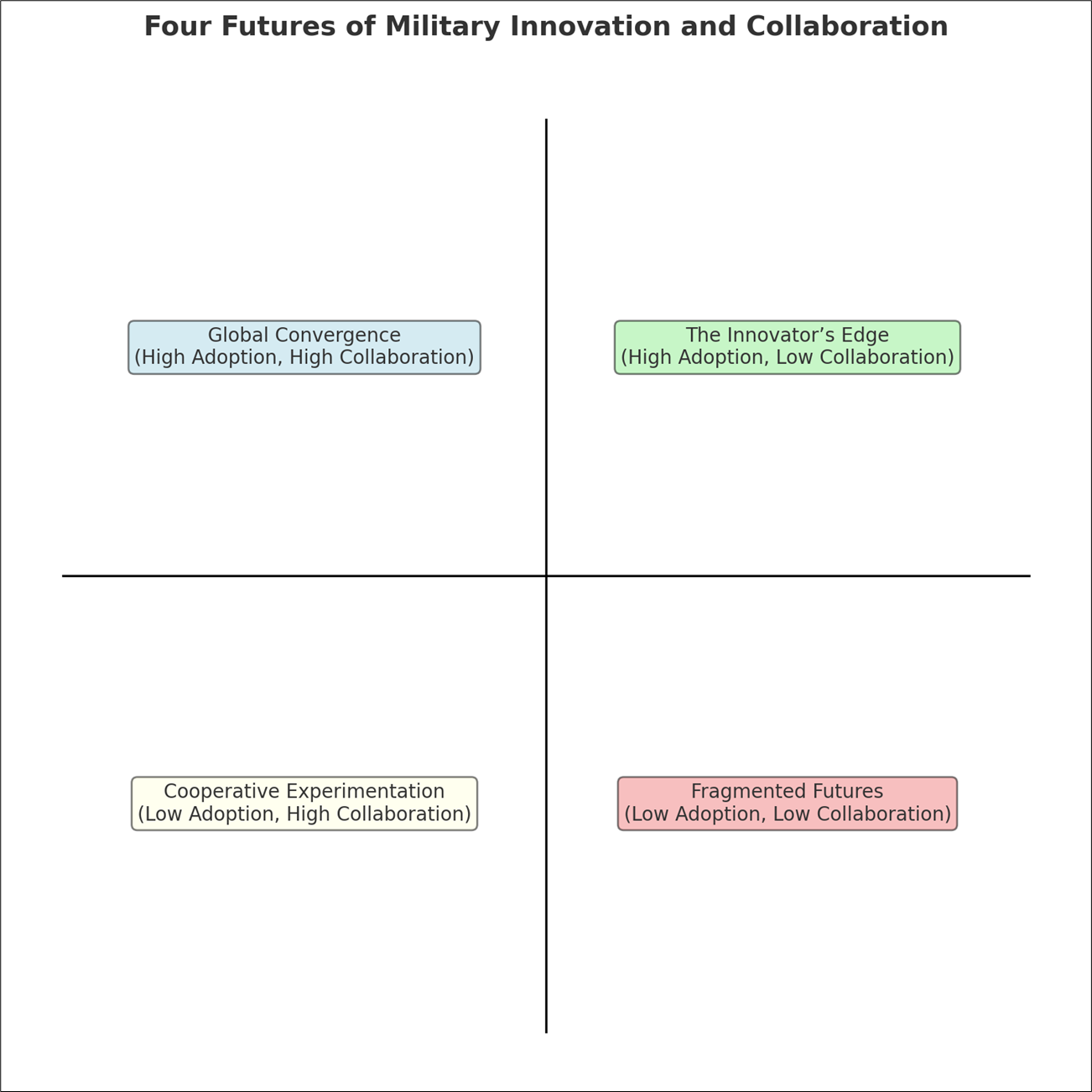

To explore this, we analyzed four potential futures for military innovation and collaboration, based on the interplay of AI adoption rates and levels of collaboration. The image above maps them:

The Innovator’s Edge (High Adoption, Low Collaboration):

Leading nations dominate, setting operational norms for others to follow. Slower adopters risk dependence on alliances or third-party technologies.

Global Convergence (High Adoption, High Collaboration):

International bodies establish equitable standards, allowing slower adopters to benefit from shared R&D and ethical frameworks.

Fragmented Futures (Low Adoption, Low Collaboration):

Uneven development creates regional conflicts, with dominant players dictating terms and slower adopters resorting to asymmetrical tactics.

Cooperative Experimentation (Low Adoption, High Collaboration):

A deliberate, collaborative approach fosters shared learning, enabling slower adopters to catch up while building global consensus.

Which Future Will Shape Military AI Innovation?

Here’s what we uncovered.

Key Factors Shaping the Future

1. Rate of AI Adoption

The global military AI market is expanding rapidly, with some projections showing it doubling in size between 2024 and 2025.

Advanced militaries are planning to integrate AI into their operations by 2030, signaling a clear push toward widespread adoption.

2. Levels of Collaboration

There are promising examples of allied cooperation, like partnerships among nations to co-develop AI technologies.

However, rivalries between major powers, such as the US and China, reveal limited collaboration and a focus on unilateral dominance.

3. Geopolitical Tensions

Competition between leading powers often stifles the ability to create global standards for military AI, adding to the complexity of collaboration.

4. Resource Disparities

Smaller nations face challenges in developing advanced AI capabilities, often relying on alliances or external providers to keep pace.

The Most Likely Scenario: The Innovator’s Edge

Based on current trends, the future most likely resembles The Innovator’s Edge:

High Adoption, Low Collaboration

Leading nations are rapidly integrating AI into their military strategies while setting operational and ethical norms. Slower adopters are left reliant on alliances or external technologies, which risks widening the gap in capabilities.

Why It Matters

For Leading Nations: They will define the benchmarks for how AI is used in warfare, consolidating power and influence.

For Slower Adopters: Without significant investment or partnerships, they risk dependency and losing autonomy in critical defence capabilities.

For Global Stability: As the gap between leaders and followers grows, so does the potential for geopolitical tension, with dominant nations shaping global security norms.

What Can Be Done?

To navigate this trajectory, stakeholders could:

Encourage Collaboration: Leading nations should actively support the creation of ethical AI frameworks and shared standards.

Support Capacity Building: Help slower adopters develop the expertise and resources to integrate AI effectively.

Monitor Geopolitical Risks: Ensure that advancements in AI do not exacerbate tensions or lead to destabilization.

The Ripple Effects of Autonomous Warfare Strategy

Israel’s active integration of autonomous systems is more than a technological leap; it is a strategic shift that forces other nations to confront the pace and purpose of their own military AI adoption. As foresight practitioners, we must look beyond the immediate implications on the battlefield and ask deeper questions:

How will the disparity between active integration and experimentation reshape alliances and rivalries?

What unintended consequences might arise when one nation’s tactical decisions set global precedents for AI in combat?

Can slower adopters mitigate their vulnerabilities, or will they be locked into dependency cycles that redefine global power structures?

Israel’s approach demonstrates that the future of warfare is not merely about technology but about the strategic agility to act decisively. For the rest of the world, the challenge lies in adapting quickly enough to help shape this reality rather than merely react to it.