The Future of Expertise

Is AI making us dumber?

This is the debate I’ve been watching grow lately:

→ “AI is taking away critical thinking.”

→ “We’re going to lose expertise.”

Every time it comes up, someone points to the latest MIT study — so let’s start there:

The MIT Study (and what it shows)

54 students wrote essays — in 3 groups:

1️⃣ LLM only (ChatGPT)

2️⃣ Search engine only

3️⃣ Brain only (no tools)

They measured brain activity (EEG), quality of essays, ownership, and memory.

Key results:

LLM group showed reduced brain connectivity — meaning lower cognitive engagement during writing.

LLM users had much worse memory of what they wrote — couldn’t quote their own essay minutes later.

LLM users felt less ownership of their writing.

When LLM users were later asked to write without AI, their brain engagement was still low. The study calls this “cognitive debt.”

Search engine users stayed more cognitively active — looking for sources still forced their brain to work.

Brain-only users had the highest cognitive engagement and recall: they thought harder, remembered more, and wrote more original essays.

Conclusion? If you let AI do the thinking for you, you will think less.

That’s an important finding — but it is not the whole story.

Yet it’s being quoted everywhere right now as “proof” that AI will erode human expertise.

Here’s the issue:

That’s not how advanced AI users are working.

That’s not what’s happening in real field practice.

The pattern is older than AI.

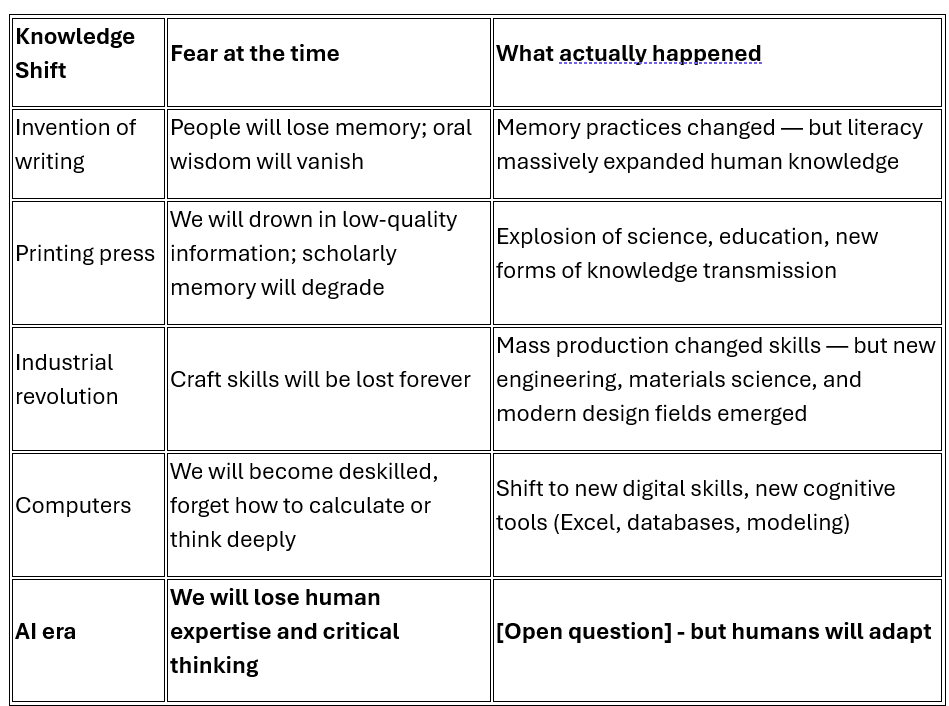

Every major knowledge shift has triggered fear:

The risk is real — but it’s not inevitable.

Yes — if people use AI passively (like the students in the MIT study), cognitive offloading happens.

Critical thinking declines.

Expertise degrades.

But that’s not the only path.

We are already seeing something else emerge:

New Meta-Expertise — what’s happening

In leading-edge practice, new forms of expertise are taking shape:

Meta-Skills:

1️⃣ AI Literacy — knowing model strengths, gaps, risks

2️⃣ Systems Thinking — navigating complex, dynamic relationships

3️⃣ Orchestration — blending human and AI reasoning for stronger outcomes

4️⃣ Critical Inquiry — asking sharper, more catalytic questions

5️⃣ Adaptive Learning — continuously evolving one’s expertise as AI evolves

New Critical Thinking Patterns:

→ AI-Literate Skepticism — knowing what to trust, and when to probe

→ Layered Reasoning — testing multiple explanations in parallel

→ Reflexive Inquiry — surfacing your own blind spots using AI feedback

→ Meta-Questioning — shaping inquiry to unlock deeper understanding

→ Collaborative Criticality — reasoning in human + AI + human loops, not solo

Why this matters

Expertise is not disappearing.

It is evolving — fast.

In the next 5–10 years, the most valuable thinkers will not be those with the deepest archives of knowledge in their heads.

They will be those learning to:

→ Frame better questions

→ Navigate shifting knowledge domains

→ Blend human and machine insight

→ Think with AI, not just use it

→ Lead collective reasoning loops — across humans and agents

→ Adapt continuously — as tools, models, and systems evolve

→ Orchestrate multi-agent intelligence — human and machine — for strategic outcomes

→ Shape how AI influences real-world decisions, not just accept its outputs

→ Build new forms of institutional intelligence — beyond individual expertise

These capabilities are only starting to take shape now.

They are not yet widespread.

But they may define the next layer of human expertise — and the edge of competitive advantage.